In this article, we will learn:

- What is MapReduce

- A few interesting facts about MapReduce

- MapReduce components and architecture

- How MapReduce works in Hadoop

MapReduce:

MapReduce is a programming model for processing large data sets in batch mode. A MapReduce program is composed of:

- a Map() procedure that performs filtering and sorting (such as sorting students by first name into queues, one queue for each name)

- and a Reduce() procedure that performs a summary operation (such as counting the number of students in each queue, yielding name frequencies).
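The student example above can be sketched in plain Python. This is only an in-memory illustration of the programming model, not Hadoop code: the function names (`map_student`, `shuffle`, `reduce_count`, `run_job`) and the sample records are made up for this sketch, and the grouping step stands in for the work the framework does between the two phases.

```python
from collections import defaultdict

def map_student(record):
    """Map step: emit a (first_name, 1) pair for one student record."""
    first_name = record.split()[0]
    yield first_name, 1

def shuffle(pairs):
    """Group values by key, standing in for the framework's shuffle step."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_count(name, ones):
    """Reduce step: sum the counts collected for one first name."""
    return name, sum(ones)

def run_job(records):
    """Run map, shuffle and reduce in sequence on a small in-memory input."""
    pairs = [pair for record in records for pair in map_student(record)]
    groups = shuffle(pairs)
    return dict(reduce_count(name, ones) for name, ones in sorted(groups.items()))

students = ["Alice Smith", "Bob Jones", "Alice Brown"]
print(run_job(students))  # {'Alice': 2, 'Bob': 1}
```

In a real cluster the map calls run in parallel on different machines and the grouped values are moved over the network, but the contract between the two functions is the same.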

A Few Important Facts about MapReduce:

- Apache Hadoop MapReduce is an open source implementation of Google's MapReduce framework.

- There are other MapReduce-style frameworks for distributed systems, such as Dryad from Microsoft and Disco from Nokia, but Hadoop's open source implementation is the most popular among them.

- The Hadoop MapReduce framework follows a master/slave architecture.

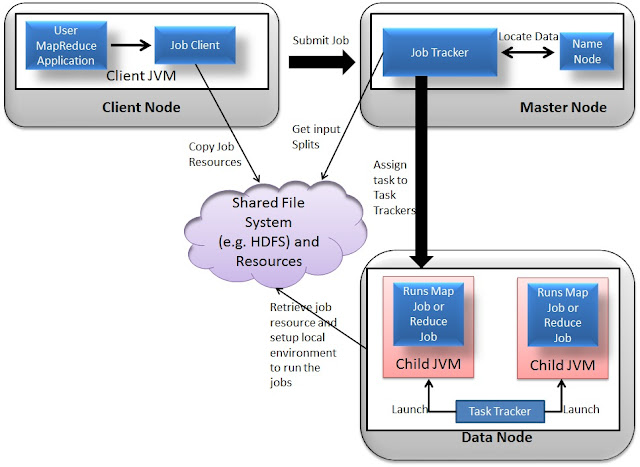

MapReduce Architecture:

Hadoop 1.x MapReduce is composed of two components:

- The Job Tracker, which plays the role of master and runs on the master node (often the same machine as the NameNode).

- The Task Tracker, which plays the role of slave; one runs on each DataNode.

Job Tracker:

- The Job Tracker is the component to which client applications submit MapReduce programs (jobs).

- The Job Tracker schedules client jobs and allocates tasks to the slave Task Trackers running on individual worker machines (data nodes).

- The Job Tracker manages the overall execution of a MapReduce job.

- The Job Tracker manages the resources of the cluster. It:

  - manages the data nodes, i.e. the Task Trackers;

  - keeps track of consumed and available resources;

  - keeps track of running tasks and provides fault tolerance for them.

Task Tracker:

- Each Task Tracker is responsible for executing and managing the individual tasks assigned by the Job Tracker.

- The Task Tracker also handles the movement of data between the map and reduce phases.

- A primary responsibility of the Task Tracker is to keep reporting the status of its tasks to the Job Tracker.

- If the JobTracker fails to receive a heartbeat from a TaskTracker within a specified amount of time, it will assume the TaskTracker has crashed and will resubmit the corresponding tasks to other nodes in the cluster.
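The heartbeat timeout described above can be sketched as follows. This is a simplified model rather than the JobTracker's actual code: the class `HeartbeatMonitor`, its methods, and the 600-second timeout in the usage lines are assumptions made for illustration, and timestamps are passed in explicitly so that the behaviour is easy to follow.

```python
class HeartbeatMonitor:
    """Toy model of a master that tracks worker heartbeats (hypothetical API)."""

    def __init__(self, timeout_seconds):
        self.timeout_seconds = timeout_seconds
        self.last_seen = {}  # tracker id -> timestamp of last heartbeat
        self.assigned = {}   # tracker id -> set of task ids running there

    def assign(self, tracker, task):
        """Record that a task was handed to a tracker."""
        self.assigned.setdefault(tracker, set()).add(task)

    def heartbeat(self, tracker, now):
        """Record a heartbeat received from a tracker at time `now`."""
        self.last_seen[tracker] = now

    def expire(self, now):
        """Drop trackers whose heartbeat is overdue; return their tasks."""
        lost_tasks = []
        for tracker, seen in list(self.last_seen.items()):
            if now - seen > self.timeout_seconds:
                lost_tasks.extend(sorted(self.assigned.pop(tracker, set())))
                del self.last_seen[tracker]
        return lost_tasks

monitor = HeartbeatMonitor(timeout_seconds=600)
monitor.heartbeat("tt1", now=0)
monitor.heartbeat("tt2", now=0)
monitor.assign("tt1", "map_0001")
monitor.assign("tt2", "map_0002")
monitor.heartbeat("tt2", now=500)  # tt2 keeps reporting, tt1 goes silent
print(monitor.expire(now=700))     # ['map_0001']
```

The tasks returned by `expire` are the ones the master would hand to other nodes.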

How MapReduce Engine Works:

Let us understand how exactly a MapReduce program gets executed in Hadoop, and how the different entities involved in the process relate to each other.

The entire process can be listed as follows:

- Client applications submit jobs to the JobTracker.

- The JobTracker talks to the NameNode to determine the location of the data.

- The JobTracker locates TaskTracker nodes with available slots at or near the data.

- The JobTracker submits the work to the chosen TaskTracker nodes.

- The TaskTracker nodes are monitored. If they do not submit heartbeat signals often enough, they are deemed to have failed and the work is scheduled on a different TaskTracker.

- A TaskTracker will notify the JobTracker when a task fails. The JobTracker decides what to do then: it may resubmit the job elsewhere, it may mark that specific record as something to avoid, and it may even blacklist the TaskTracker as unreliable.

- When the work is completed, the JobTracker updates its status.

- Client applications can poll the JobTracker for information.

Let us look at these steps in more detail.

1. Client submits MapReduce job to Job Tracker:

Whenever a client/user submits a MapReduce job, it goes straight to the Job Tracker. The client program contains all the required information, such as the map, combine, and reduce functions and the input and output paths of the data.

2. Job Tracker Manages and Controls the Job:

- The JobTracker puts the job in a queue of pending jobs and then executes them on an FCFS (first come, first served) basis.

- The Job Tracker first determines the number of splits from the input path and assigns map and reduce tasks to the Task Trackers in the cluster. There is one map task for each split.

- The Job Tracker talks to the NameNode to determine the location of the data, i.e. which DataNodes contain it.
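Since there is one map task per split, the number of map tasks can be estimated from the input sizes. The sketch below assumes that each file is cut into fixed-size splits and that a split never spans two files; the function name `count_map_tasks`, the 64 MB split size, and the file sizes are illustrative assumptions, and the real split calculation has additional rules that are ignored here.

```python
MB = 1024 * 1024

def count_map_tasks(file_sizes, split_size):
    """Estimate map tasks as the total number of fixed-size splits."""
    total = 0
    for size in file_sizes:
        if size > 0:
            # Ceiling division: a trailing partial split still needs a task.
            total += -(-size // split_size)
    return total

# Three input files of 200 MB, 64 MB and 10 MB with an assumed 64 MB split size.
print(count_map_tasks([200 * MB, 64 * MB, 10 * MB], split_size=64 * MB))  # 6
```

The 200 MB file contributes four splits (three full and one partial), and the other two files contribute one split each.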

3. Task Assignment to Task Tracker by Job Tracker:

- Each Task Tracker is pre-configured with a number of slots, which indicates how many tasks it can accept. For example, a TaskTracker may be able to run two map tasks and two reduce tasks simultaneously.

- When the Job Tracker tries to schedule a task, it looks for an empty slot in the Task Tracker running on the same server that hosts the DataNode where the data for that task resides. If none is found, it looks for a machine in the same rack. System load is not considered during this allocation.
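The locality preference described above can be sketched as a small selection function. This is a simplified illustration, not the scheduler's real code: `pick_tracker`, the host and rack names, and the final fallback to any tracker with a free slot are assumptions added for the sketch.

```python
def pick_tracker(replica_hosts, free_slots, rack_of):
    """Pick a tracker for a task, preferring hosts close to the data.

    replica_hosts: hosts holding a copy of the task's input split
    free_slots:    dict of host -> number of free slots
    rack_of:       dict of host -> rack name
    Returns (host, locality), or None if no slot is free anywhere.
    """
    candidates = [host for host, slots in free_slots.items() if slots > 0]

    # 1. Same server as the data.
    for host in candidates:
        if host in replica_hosts:
            return host, "node-local"

    # 2. Same rack as the data.
    replica_racks = {rack_of[host] for host in replica_hosts}
    for host in candidates:
        if rack_of[host] in replica_racks:
            return host, "rack-local"

    # 3. Assumed fallback: any tracker with a free slot.
    if candidates:
        return candidates[0], "off-rack"
    return None

rack_of = {"node1": "rack1", "node2": "rack1", "node3": "rack2"}
free_slots = {"node1": 0, "node2": 1, "node3": 2}
print(pick_tracker({"node1"}, free_slots, rack_of))  # ('node2', 'rack-local')
```

Note that the function only checks whether a slot is free; it never looks at how busy a machine is, which matches the point that system load is not considered.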

4. Task Execution by Task Tracker:

- Once a task is assigned to a Task Tracker, the Task Tracker creates a local environment to run it.

- The Task Tracker needs resources to run the job. It therefore copies any files the application needs from the distributed cache to the local disk, and localizes the job JARs by copying them from the shared file system to the Task Tracker's file system.

- The Task Tracker can also spawn multiple JVMs to handle many map or reduce tasks in parallel.

- The Task Tracker then starts the map or reduce tasks and reports progress back to the JobTracker.

5. Send notification to Job Tracker:

- When the Task Trackers have finished all the map tasks, they notify the Job Tracker. The Job Tracker then asks the selected Task Trackers to run the reduce phase.

6. Task recovery in failover situation:

- Although there is a single Task Tracker on each node, it spawns a separate Java Virtual Machine (JVM) process to run the task, so that the Task Tracker itself does not fail if the running task crashes its JVM because of bugs in the user-written map or reduce function.
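The same isolation idea can be shown with an analogy in Python, where a child interpreter process plays the role of the task JVM. This is not how Hadoop launches tasks; `run_task_in_child` and the task snippets are hypothetical, and the point is only that a crash in the child leaves the parent running.

```python
import subprocess
import sys

def run_task_in_child(task_source):
    """Run task code in a separate interpreter process.

    Returns True if the child exited cleanly, False if it crashed.
    The parent keeps running either way.
    """
    result = subprocess.run(
        [sys.executable, "-c", task_source],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0

good_task = "print(sum(range(10)))"
buggy_task = "raise RuntimeError('bug in user-written map function')"

print(run_task_in_child(good_task))   # True
print(run_task_in_child(buggy_task))  # False
print("parent process is still alive")
```

Because the failure is reported as an exit status instead of an exception in the parent, the parent can decide to report the failure and carry on with other tasks.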

7. Monitor Task Tracker:

- The TaskTracker nodes are monitored. Each TaskTracker sends a heartbeat to the JobTracker at a short, regular interval (every few seconds by default) to report its status.

- If a TaskTracker does not submit heartbeat signals often enough, it is deemed to have failed and its work is scheduled on a different TaskTracker.

- A TaskTracker will notify the JobTracker when a task fails. The JobTracker decides what to do then: it may resubmit the job elsewhere, it may mark that specific record as something to avoid, and it may even blacklist the TaskTracker as unreliable.
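The decision the JobTracker makes after a task failure can be sketched as a small policy object. The class `FailurePolicy`, its two thresholds, and the returned strings are all assumptions for illustration; they are not Hadoop configuration names or defaults, and skipping bad records is left out of the sketch.

```python
from collections import Counter

class FailurePolicy:
    """Toy failure policy: retry tasks, blacklist unreliable trackers."""

    def __init__(self, max_attempts, blacklist_after):
        self.max_attempts = max_attempts        # failed attempts allowed per task
        self.blacklist_after = blacklist_after  # failures before a tracker is blacklisted
        self.attempts = Counter()               # task id -> failed attempts
        self.tracker_failures = Counter()       # tracker id -> failed tasks
        self.blacklist = set()

    def on_task_failed(self, task, tracker):
        """Record a failure and return 'retry' or 'fail_job'."""
        self.attempts[task] += 1
        self.tracker_failures[tracker] += 1
        if self.tracker_failures[tracker] >= self.blacklist_after:
            self.blacklist.add(tracker)
        if self.attempts[task] < self.max_attempts:
            return "retry"
        return "fail_job"

policy = FailurePolicy(max_attempts=2, blacklist_after=2)
print(policy.on_task_failed("map_0001", "tt1"))  # retry
print(policy.on_task_failed("map_0002", "tt1"))  # retry
print(sorted(policy.blacklist))                  # ['tt1']
print(policy.on_task_failed("map_0001", "tt2"))  # fail_job
```

A scheduler using this policy would skip blacklisted trackers when it retries a task.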

8. Job Completion:

- When the work is completed, the JobTracker updates its status.

- Client applications can poll the JobTracker for information.
